The web has made it easy to share your passion with others. Whether you collect PEZ® dispensers, Lego® minifigs, or photos of famous people’s graves, the advent of the web has been a boon for enthusiasts of all sorts because publishing content requires less time and technical knowledge than it used to.

Add to that the awesome power of database-driven content management systems and web frameworks (for the more technically inclined) and, all of a sudden, you have the opportunity to catalog, organize, and display hundreds if not thousands of images, videos, and audio files for your own pleasure and that of others like you. Of course, as with physical collections, it’s quite easy for digital collections to get out of control and, moreover, for pages displaying said collections to become enormous and take a long time to load. Optimizing web pages is key to increasing access to such a collection, or really any page for that matter. For the purposes of this article, I’d like to focus on large collection-type pages.

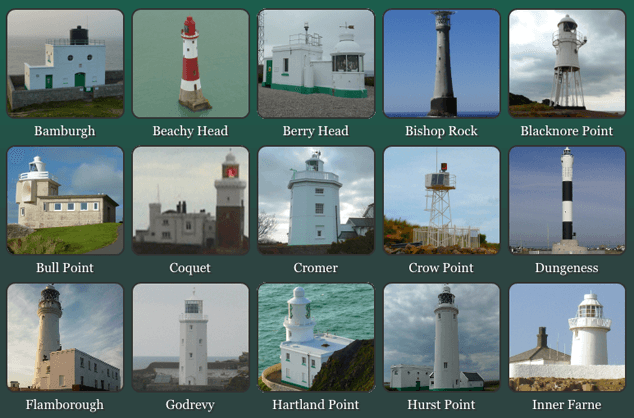

To get a sense of the type of page I’m describing, take a look at this collection of English lighthouses. Pretty impressive, right? Worldwide Lighthouses asked us to help them with the performance of their pages which, unsurprisingly, take a bit of time to load. Thankfully, by following a few basic guidelines, many of which were outlined by Steve Souders in Yahoo!’s YSlow Performance Rules, we can get this site to load much more quickly.

Combine!

One of the easiest ways to improve the speed of your site is by combining asset files, such as CSS and JavaScript. Every asset file that is referenced in your documents requires the browser to make a request to the server so it can be retrieved, and each request takes time: precious time, time your users won’t get back.

Taking a look at the HTML of the English Lighthouses page, we can see that the author is using three stylesheets and three JavaScript files:

<link rel="stylesheet" href="http://www.worldwidelighthouses.com/Page-Layout.css" media="screen and (min-width: 481px)">

<link rel="stylesheet" href="http://www.worldwidelighthouses.com/Mobile-Page-Layout.css" media="only screen and (max-width:480px)">

<link rel="stylesheet" href="http://www.worldwidelighthouses.com/js/Image-Viewer/jquery.fancybox-1.3.4.css" media="screen and (min-width: 481px)">

<script src="http://www.worldwidelighthouses.com/js/jquery-1.4.3.min.js"></script>

<script src="http://www.worldwidelighthouses.com/js/Image-Viewer/jquery.fancybox-1.3.4.pack.js"></script>

<script src="http://www.worldwidelighthouses.com/js/customTransition.js"></script>

Each of these stylesheets requires a browser that comprehends media queries in order to access the styles (which is another issue altogether), but let’s sidestep that and focus on reducing the number of asset files.

Starting with the styles, those three individual server requests could be reduced to one by “munging” (or combining) them into a single file, using @media declarations to maintain stylistic separation:

@media screen and (min-width: 481px) {

/* contents of Page-Layout.css and jquery.fancybox-1.3.4.css can go here */

}

@media only screen and (max-width:480px) {

/* contents of Mobile-Page-Layout.css go here */

}

Some of you may be wondering why I recommend @media over @import. The answer is simple: @import still requires the download of additional CSS files, and fewer requests mean better performance.
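As a rough illustration of the munging step, the following Node.js sketch wraps each stylesheet's contents in its matching @media block and joins the results into one string. The `combineStylesheets` helper and the sample rules are hypothetical stand-ins, not the site's real styles, and the sketch assumes none of the source files already contain @media rules of their own.

```javascript
// Hypothetical helper: wraps each stylesheet's contents in an @media block
// and joins the blocks into a single string.
function combineStylesheets(sheets) {
  return sheets
    .map(({ media, css }) => `@media ${media} {\n${css.trim()}\n}`)
    .join("\n\n");
}

// Placeholder rules standing in for the real file contents.
const combined = combineStylesheets([
  { media: "screen and (min-width: 481px)", css: "body { margin: 0 auto; }" },
  { media: "only screen and (max-width:480px)", css: "body { margin: 0; }" },
]);

console.log(combined);
```

In practice you would read the real files from disk and write the result to something like `/main.css`; the point is only that the separation between desktop and mobile rules survives the merge.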

Returning to the markup, if we switch the asset paths for this new, single CSS file and the JavaScript files from absolute to site root relative URLs (i.e. URLs that start with a leading slash), we can further reduce the size of the HTML file:

<link rel="stylesheet" href="/main.css" media="all">

<script src="/js/jquery-1.4.3.min.js"></script>

<script src="/js/Image-Viewer/jquery.fancybox-1.3.4.pack.js"></script>

<script src="/js/customTransition.js"></script>

Next up is the JavaScript. As with the CSS, the JavaScript can be combined to reduce the number of requests to the server. If you decide to munge JavaScript files manually, it’s usually recommended that you comment your code well so you know what each piece is when you need to edit the file later. Here’s an example:

/*

* jQuery JavaScript Library v1.4.3

… remainder of comment and code … */

/*

* FancyBox - jQuery Plugin

… remainder of comment and code … */

/*

* My custom JS code

… remainder of comment and code … */

Switching to a single combined JavaScript file for these three scripts would reduce the number of server requests (and the markup), which is pretty sweet:

<link rel="stylesheet" href="/main.css" media="all">

<script src="/js/main.js"></script>
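If you'd rather not munge by hand, the same idea could be scripted. This is a minimal sketch, assuming Node.js; `concatScripts`, the file names, and the sample sources are made up for illustration. It labels each piece with a banner comment and adds a defensive semicolon after every file, so a missing trailing semicolon in one script can't run into the next.

```javascript
// Hypothetical helper: joins script sources into one bundle, labelling each
// piece with a banner comment and terminating it with a semicolon.
function concatScripts(files) {
  return files
    .map(({ name, source }) => `/* ---- ${name} ---- */\n${source.trim()}\n;`)
    .join("\n");
}

// Tiny stand-ins for a library and the custom code that depends on it.
const bundle = concatScripts([
  { name: "library.js", source: "var lib = { version: '1.0' }" },
  { name: "custom.js", source: "var greeting = 'v' + lib.version" },
]);

console.log(bundle);
```

Order matters here just as it does with separate script elements: the library has to come before the code that uses it.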

Combining this code to minimize requests can be a balancing act, in which caching and “last-modified” dates on files play a role as well. Your site’s custom code may change quite often, and if that is the case, you may want to keep the relatively static JavaScript code from third-party libraries in a separate file so that it doesn’t have to be re-downloaded whenever you tweak your custom code. It would require more server requests, but may end up serving your users better on the whole. And if the library files are already cached, only the headers will be re-downloaded.
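To make the "only the headers" point concrete, here is a hedged sketch of the decision a server makes when a browser revalidates a cached file. `respondToRequest` and the `library` object are hypothetical; real web servers handle this for you and also weigh other headers (such as ETag and Cache-Control) that this sketch ignores.

```javascript
// Decide how to answer a request for a static file.
// `ifModifiedSince` is the raw If-Modified-Since header value, or undefined.
function respondToRequest(ifModifiedSince, file) {
  // HTTP dates have one-second resolution, so drop the milliseconds.
  const lastModified = Math.floor(file.modifiedAt.getTime() / 1000) * 1000;
  const headers = { "Last-Modified": new Date(lastModified).toUTCString() };

  const cachedCopyDate = Date.parse(ifModifiedSince); // NaN when the header is absent
  if (!Number.isNaN(cachedCopyDate) && lastModified <= cachedCopyDate) {
    return { status: 304, headers, body: "" }; // headers only, no file contents
  }
  return { status: 200, headers, body: file.contents };
}

// Made-up file record for illustration.
const library = {
  modifiedAt: new Date("2010-10-16T00:00:00Z"),
  contents: "/* library code */",
};

const firstVisit = respondToRequest(undefined, library);
const repeatVisit = respondToRequest(firstVisit.headers["Last-Modified"], library);

console.log(firstVisit.status); // 200
console.log(repeatVisit.status, repeatVisit.body.length); // 304 0
```

Because the library file rarely changes, repeat visitors keep getting the cheap 304 response for it even while your custom file is re-downloaded after every tweak.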

Of course, since many web sites probably use the same libraries as your site, you might want to use that to your advantage. jQuery, for example, is a very popular library and you might get better performance if you include it from an external source, such as the Google Libraries API and accompanying Content Delivery Network (CDN). Doing so can have two positive effects on your load speed: 1) chances are pretty good that Google’s servers are a helluvalot faster than anything you can afford, so your users will be delivered the file quickly; 2) with a number of sites relying on that same CDN source file for jQuery, there’s an excellent chance your user’s browser may already have the file cached, in which case it won’t be downloaded again for use on your site.

Here’s how that might look:

<link rel="stylesheet" href="/main.css" media="all">

<script src="//ajax.googleapis.com/ajax/libs/jquery/1.4.3/jquery.min.js"></script>

<script src="/js/main.js"></script>

Also, if you’re worried about the rare chance that Google’s CDN is down, you can test whether or not jQuery was downloaded successfully, and, if not, fall back to linking to a local copy of the file (code pattern courtesy of the HTML5 Boilerplate project):

<link rel="stylesheet" href="/main.css" media="all">

<script src="//ajax.googleapis.com/ajax/libs/jquery/1.4.3/jquery.min.js"></script>

<script>window.jQuery || document.write("<script src='/js/jquery-1.4.3.min.js'>\x3C/script>")</script>

<script src="/js/main.js"></script>

Reorganize!

Code organization can be as important to your page’s performance as how many script and style tags are in your page to begin with. As browsers are parsing an HTML file, they stop the processing and rendering of the page when they encounter style or script elements.

In the case of CSS, browsers wait until they have downloaded all style rules before proceeding to render the page; including them in the head of the document ensures your pages begin rendering quickly. Thankfully, multiple CSS files can also be downloaded simultaneously, further speeding up the process.

The browser stops rendering when loading JavaScript files as well, but it has an additional restriction: older browsers download only one JavaScript file at a time, even if the files come from different hostnames or domains, and newer browsers that fetch scripts in parallel still execute them one at a time, in source order. Consequently, including multiple JavaScript files in the head of your document can have a huge effect on both the perceived and actual load times of your pages.

If at all possible, move JavaScript includes to the bottom of your document:

<!-- document -->

<script src="//ajax.googleapis.com/ajax/libs/jquery/1.4.3/jquery.min.js"></script>

<script>window.jQuery || document.write("<script src='/js/jquery-1.4.3.min.js'>\x3C/script>")</script>

<script src="/js/main.js"></script>

</body>

Compress!

My final recommendation for improving the speed with which this page loads would be to apply compression wherever possible.

The first place to start would be compressing text files (HTML, CSS, and JavaScript) on the server end with gzip. Gzip compression works by finding repeated strings—such as whitespace, tags, style definitions and the like—within a text file and replacing them temporarily with a smaller symbol. These symbols can then be used to swap the strings back to their original form once the browser has received the file. All modern browsers support gzip compression, so it’s a no-brainer for compressing content.
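To get a feel for how well repetitive markup compresses, here is a small sketch using Node.js's built-in `zlib` module. The markup is invented for the demonstration (a list item repeated 200 times), so the ratio it prints will be more flattering than what a real page achieves.

```javascript
const zlib = require("zlib");

// Invented, highly repetitive markup in the spirit of a long gallery page.
const item = '<li class="lighthouse"><img src="/images/thumb.png" alt=""></li>\n';
const html = "<ul>\n" + item.repeat(200) + "</ul>\n";

const original = Buffer.from(html, "utf8");
const compressed = zlib.gzipSync(original);
const restored = zlib.gunzipSync(compressed).toString("utf8");

console.log(`original: ${original.length} bytes`);
console.log(`gzipped:  ${compressed.length} bytes`);
console.log(`round trip intact: ${restored === html}`);
```

On a live site you would normally switch compression on in the web server's configuration rather than compress files yourself; the script only shows the effect.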

The next step to decreasing the size of your files would be to use image compression tools like those found in Photoshop’s “Export for Web and Devices” and online tools like Yahoo!’s Smush.it and PunyPNG. There are lots of resources out there that discuss image compression and optimization, but as this page makes use of PNGs, I’d recommend simply running them through one of the online services or Smusher, a command-line interface to Smush.it and PunyPNG. If you’re on a Mac and aren’t a big fan of the command line, ImageOptim is another option.

Finally, I’d recommend creating compressed versions of every text file. Even though gzip will help a lot, it works even better if your files are already pretty small. For HTML and CSS files, where all you really need is whitespace removal, I’d opt for something along the lines of what Mike Davidson promoted back in 2005: using global prepend & append to build an output buffer you can compress using regular expressions. The nice thing about that approach is that you can leave your beautifully-indented original work untouched. And if you’re really inspired, you could even cache that result for a little server-side optimization.
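The regular-expression idea can be sketched in a few lines. This is a JavaScript illustration of the general technique, not the original implementation mentioned above; `collapseWhitespace` and the sample markup are hypothetical. It is deliberately naive: it would mangle whitespace-sensitive content such as pre or textarea elements, and removing the space between inline elements can change how they render.

```javascript
// Naive whitespace removal for markup held in an output buffer.
function collapseWhitespace(markup) {
  return markup
    .replace(/\s+/g, " ")  // collapse every run of whitespace into one space
    .replace(/> </g, "><") // drop the single space left between adjacent tags
    .trim();
}

// Nicely indented sample markup standing in for a rendered page.
const pretty = [
  "<ul>",
  "    <li>One</li>",
  "    <li>Two  words</li>",
  "</ul>",
  "",
].join("\n");

console.log(collapseWhitespace(pretty));
// <ul><li>One</li><li>Two words</li></ul>
```

The indented source stays untouched on disk; only the copy sent over the wire is squeezed.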

On the JavaScript side of things, it gets a little trickier. Bad things can happen when you strip the whitespace from JavaScript files that opt not to use semicolons (;) and curly braces ({}). That’s when the YUI Compressor proves itself invaluable. It reads your JavaScript files (and CSS for that matter) then optimizes the file so that it won’t break when compressed.
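Here is a small demonstration of the "bad things" in question. The snippet relies on line breaks instead of semicolons, and a naive compressor (simulated here by swapping line breaks for spaces) turns it into a syntax error. The `runs` helper is just a hypothetical test harness for this example.

```javascript
// Source written without semicolons: valid as long as the line breaks stay.
const source = "var a = 1\nvar b = 2\nreturn a + b";

// Naive "compression": replace each line break with a space.
const naive = source.replace(/\n/g, " ");

// Runs a function body and reports either its result or the error name.
function runs(body) {
  try {
    return new Function(body)();
  } catch (error) {
    return error.name;
  }
}

console.log(runs(source)); // 3
console.log(runs(naive));  // SyntaxError
```

A tool that actually understands the code's structure, rather than treating it as plain text, avoids this trap.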

Conveniently, Reduce acts as a wrapper around Smusher, PunyPNG, and YUI Compressor, making it a great one-stop shop for command-line compression.

As the table below shows, after downloading a copy of the English Lighthouses page and spending about 10 minutes using some of these tools, I was able to drop the overall size of the page (and its assets) from around 3.4MB down to 2.5MB. That’s a savings of roughly 27% before gzip even comes into play. With a little more time, I’m sure the files could be squished even more.

| | Requests (before) | Size in KB (before) | Requests (after) | Size in KB (after) |
|---|---|---|---|---|
| Totals | 90 | 3,432.9 | 87 | 2,490.9 |
| HTML/Text | 1 | 22.3 | 1 | 20.4 |
| CSS | 3 | 22.9 | 1 | 21.1 |
| JavaScript | 3 | 48.4 | 2 | 21.6 |
| Images | 83 | 3,339.3 | 83 | 2,427.8 |
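For anyone who wants to check the arithmetic, the figures in the table can be run through a few lines of JavaScript. The sizes are copied straight from the table; the variable and function names are only for illustration.

```javascript
// Sizes in KB, taken from the table above.
const before = { html: 22.3, css: 22.9, js: 48.4, images: 3339.3 };
const after = { html: 20.4, css: 21.1, js: 21.6, images: 2427.8 };

const total = (sizes) => Object.values(sizes).reduce((sum, kb) => sum + kb, 0);
const percent = (ratio) => (ratio * 100).toFixed(1) + "%";

// Overall reduction, and the share of the original page taken up by images.
const savings = (total(before) - total(after)) / total(before);
const imageShare = before.images / total(before);

console.log(percent(savings));    // 27.4%
console.log(percent(imageShare)); // 97.3%
```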

Going the Extra Mile

On gallery pages similar to the page in question, images form the bulk of the payload that needs to be sent over the wire. In this particular example, images make up over 97% of the bits required to render the page. On top of increasing the overall size of the page, each of those images requires an additional request to the server. This page alone requires an extra 83 requests in order to download all of the images. When you factor in the reality that most browsers only allow between 2 and 6 simultaneous connections to a given domain, you can bet the browser is going to spend a lot of time twiddling its thumbs, waiting to download the images, regardless of how small they are. It also means more work for the server, making it more difficult to handle heavy traffic spikes.
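A back-of-the-envelope model shows why the connection limit hurts. Assume, as a deliberate oversimplification, that images download in "rounds" with one image per available connection; real browsers reuse connections as soon as each download finishes, so treat this as a rough intuition only. `downloadRounds` is a hypothetical helper.

```javascript
// Very rough model: images download in rounds, one per available connection.
function downloadRounds(requests, parallelConnections) {
  return Math.ceil(requests / parallelConnections);
}

console.log(downloadRounds(83, 2)); // 42
console.log(downloadRounds(83, 6)); // 14
```

Even in the best case, the browser has to go back to the server more than a dozen times before the last image arrives.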

To address these issues, the Internet Engineering Task Force (IETF) created data URIs, a means of embedding image data into a text file (typically an HTML or CSS file). A data URI is typically a Base64-encoded representation of binary data, so it looks like a bunch of gibberish, but the format breaks down like this:

data:[MIME-TYPE][;charset=ENCODING][;base64],DATA

Data URIs tend to be quite a bit larger than binary image files (typically about 30% larger) and older browsers (e.g. IE7 and below) don’t understand them. However, on image-heavy sites, they can greatly reduce the number of times the server must be queried to download a resource. Plus, as text, the data URI-based images can be gzipped as well.
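Building a data URI takes very little code. The sketch below assumes Node.js and uses 300 arbitrary bytes in place of a real PNG (the bytes are not a valid image; they only demonstrate the size overhead). `toDataUri` is a hypothetical helper name.

```javascript
// Builds a Base64-encoded data URI from raw bytes.
function toDataUri(bytes, mimeType) {
  return `data:${mimeType};base64,${bytes.toString("base64")}`;
}

// 300 arbitrary bytes standing in for a small PNG; not a real image.
const fakeImage = Buffer.alloc(300, 0xab);
const uri = toDataUri(fakeImage, "image/png");
const payload = uri.slice(uri.indexOf(",") + 1);

console.log(uri.slice(0, 40) + "...");
console.log(fakeImage.length); // 300
console.log(payload.length);   // 400
```

Base64 spends four characters on every three bytes, which is where the overhead of roughly a third comes from before gzip claws some of it back.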

If you’re interested in using data URIs without having to worry about older versions of IE, Google’s mod_pagespeed for Apache is a great tool. It determines whether or not data URIs are acceptable to a given browser and alters the content accordingly. It’s pretty easy to set up too; in fact, we got it up and running here on the Sherpa site in under 10 minutes.

Pitfalls to Avoid

- Don’t require your users to download more than one or two JavaScript or CSS files without good reason.

- Don’t develop a laissez-faire attitude when it comes to source order.

Things to Do

- Combine your CSS and JavaScript files whenever possible.

- Move your JavaScript to the bottom of your document.

- Compress everything.

- If you have an automated build or release process for your site, find a way to automate the file compression and image optimization as a step in that process.

Further Reading

- “14 Rules for Faster-Loading Web Sites”, High Performance Web Sites, 18 September 2007

- “Design Fast Websites”, Yahoo! Frontend Engineering Summit, 15 October 2009

- “High Performance JavaScript”, Web Directions, 23 September 2010

Do you optimize your sites? If so, why? If not, why not?